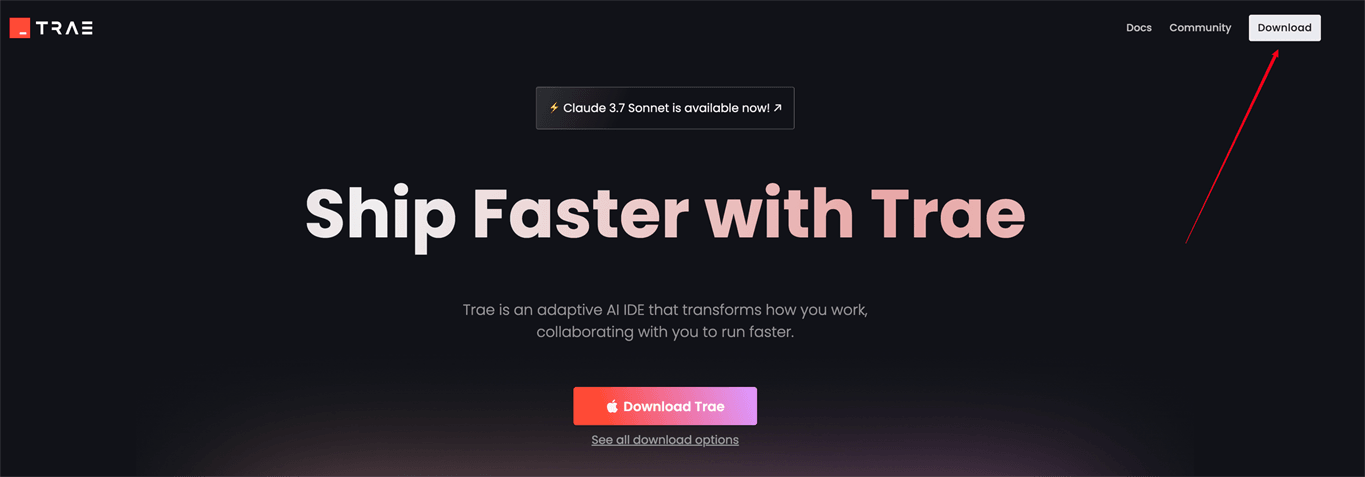

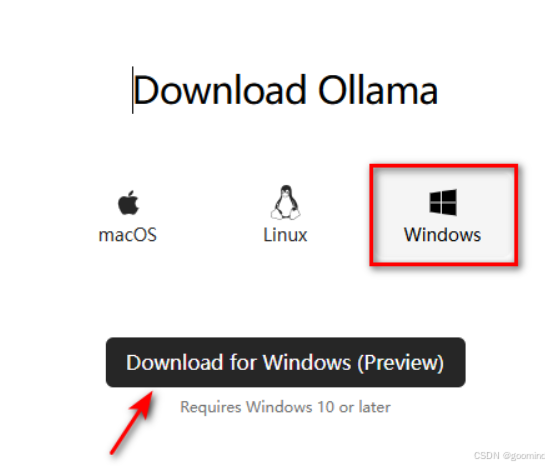

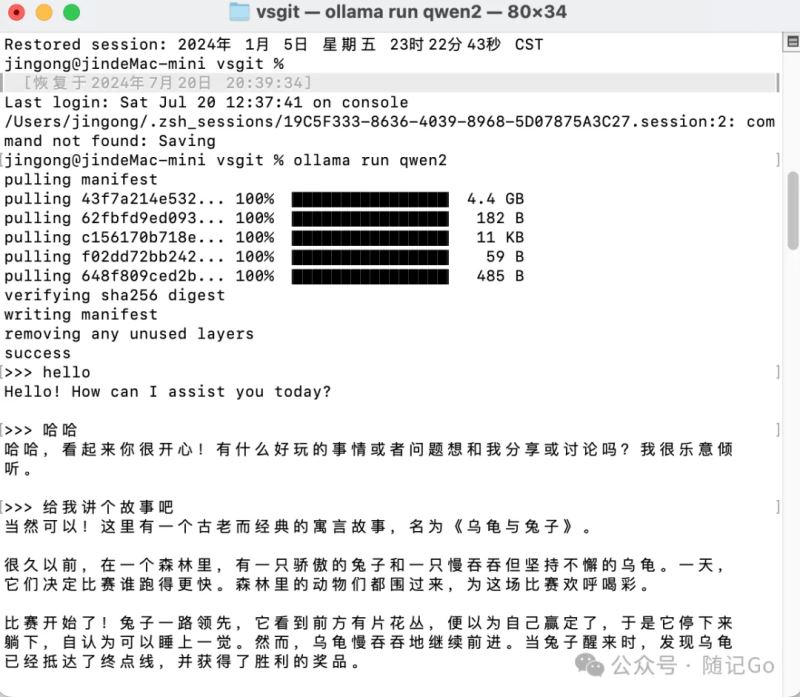

1. Download Ollama

1) Go to https://ollama.com and click Download. 2) Once the download finishes, run the installer.

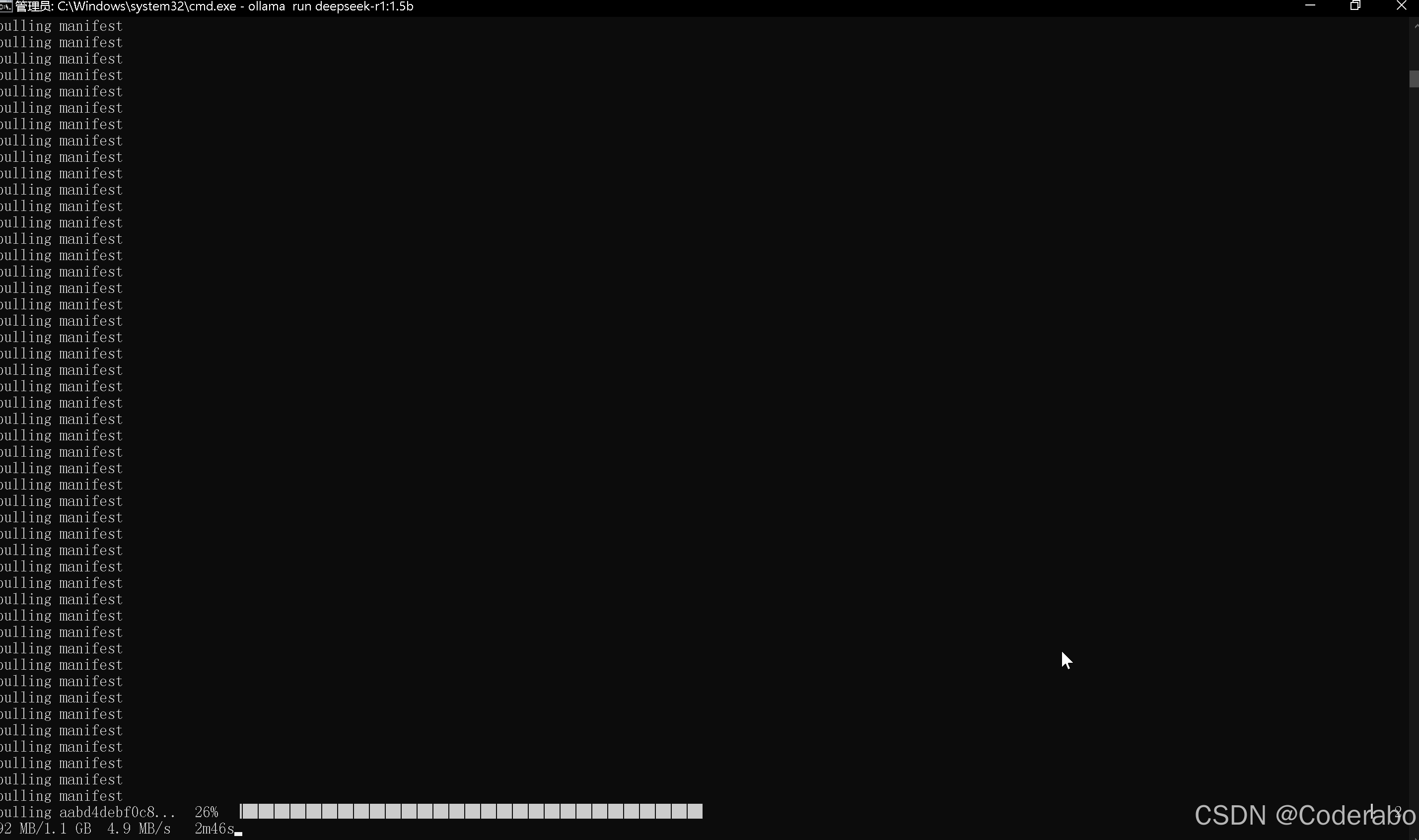

2. Start and configure a model

By default, open a cmd window and enter:

```shell
ollama run llama3
```

This starts the llama3 model. To start the Qwen model instead:

```shell
ollama run qwen2
```

Once the model is running, type your question at the prompt.

3. Configure the UI

Install Docker and deploy the web interface:

```shell
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```

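The image is large, so the pull takes a while. Once the container is running, open http://localhost:3000 in a browser.

4. Build a local knowledge base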

Use AnythingLLM.

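5. Configuration

Open port 11434 so that external clients can reach the API. For cross-origin access, also set OLLAMA_ORIGINS=*.

Windows

Set it directly in the system environment variables: the variable name is OLLAMA_HOST and the value is 0.0.0.0:11434.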

```text
OLLAMA_HOST=0.0.0.0:11434
```

macOS

Configure OLLAMA_HOST:

```shell
sudo sh -c 'echo "export OLLAMA_HOST=0.0.0.0:11434" >> /etc/profile'
launchctl setenv OLLAMA_HOST "0.0.0.0:11434"
```

Linux

Configure OLLAMA_HOST in the [Service] section of the Ollama systemd unit:

```ini
Environment="OLLAMA_HOST=0.0.0.0"
```

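6. Calling the API from a program

Go examples follow. A streaming response starts showing output sooner, which gives a better user experience.

Go example: non-streaming response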

```go
package main

import (
	"bufio"
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"strconv"
	"strings"
	"time"
)

const (
	obaseURL  = "http://localhost:11434/api"
	omodelID  = "qwen2:0.5b" // choose a model that you have pulled
	oendpoint = "/chat"
)

// olMessage is a single chat message.
type olMessage struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

// olChatCompletionRequest defines the structure of the request body.
type olChatCompletionRequest struct {
	Model    string      `json:"model"`
	Messages []olMessage `json:"messages"`
	Stream   bool        `json:"stream"`
}

// olChatCompletionResponse defines the structure of the response body.
type olChatCompletionResponse struct {
	Message olMessage `json:"message"`
}

// olsendRequestWithRetry sends the request and retries on HTTP 429.
func olsendRequestWithRetry(client *http.Client, requestBody []byte) (*http.Response, error) {
	for {
		req, err := http.NewRequest("POST", obaseURL+oendpoint, bytes.NewReader(requestBody))
		if err != nil {
			return nil, err
		}
		req.Header.Set("Content-Type", "application/json")

		resp, err := client.Do(req)
		if err != nil {
			return nil, err
		}
		if resp.StatusCode != http.StatusTooManyRequests {
			return resp, nil
		}

		// Retry-After is read as a number of seconds; wait 5 seconds by default.
		wait := 5 * time.Second
		if secs, err := strconv.Atoi(resp.Header.Get("Retry-After")); err == nil && secs >= 0 {
			wait = time.Duration(secs) * time.Second
		}
		resp.Body.Close()
		time.Sleep(wait)
	}
}

func main() {
	client := &http.Client{} // one HTTP client reused for every request

	// Conversation history, seeded with the system prompt.
	history := []olMessage{
		{Role: "system", Content: "You are a Tang dynasty poet who excels at imitating Li Bai's style."},
	}

	// Scanner for standard input.
	scanner := bufio.NewScanner(os.Stdin)

	for {
		fmt.Print("Enter your question (or type 'exit' to quit): ")
		if !scanner.Scan() {
			break // stdin was closed
		}
		userInput := strings.TrimSpace(scanner.Text())

		// Exit condition.
		if userInput == "exit" {
			fmt.Println("Thanks for using this program. Goodbye!")
			break
		}

		// Add the user input to the history.
		history = append(history, olMessage{Role: "user", Content: userInput})

		// The history already starts with the system prompt, so it is sent as is.
		requestBody := olChatCompletionRequest{
			Model:    omodelID,
			Messages: history,
			Stream:   false,
		}

		// Serialize the request body to JSON.
		requestBodyJSON, err := json.Marshal(requestBody)
		if err != nil {
			fmt.Println("Error marshalling request body:", err)
			continue
		}
		fmt.Println("request: " + string(requestBodyJSON)) // debug output

		// Send the request, retrying when needed.
		resp, err := olsendRequestWithRetry(client, requestBodyJSON)
		if err != nil {
			fmt.Println("Error sending request after retries:", err)
			continue
		}

		// Read the body and close it right away; a defer inside the loop
		// would keep every body open until main returns.
		responseBody, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			fmt.Println("Error reading response body:", err)
			continue
		}

		// Check the response status code.
		if resp.StatusCode != http.StatusOK {
			fmt.Printf("Received non-200 response status code: %d\n", resp.StatusCode)
			continue
		}

		// Parse the response body.
		var completionResponse olChatCompletionResponse
		if err := json.Unmarshal(responseBody, &completionResponse); err != nil {
			fmt.Println("Error unmarshalling response body:", err)
			continue
		}
		fmt.Printf("AI reply: %s\n", completionResponse.Message.Content)

		// Add the assistant's reply to the history.
		history = append(history, completionResponse.Message)
	}
}
```

Go example: streaming response

```go
package main

import (
	"bufio"
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"strconv"
	"strings"
	"time"
)

const (
	obaseURL  = "http://localhost:11434/api"
	omodelID  = "qwen2:0.5b" // choose a model that you have pulled
	oendpoint = "/chat"
)

// olMessage is a single chat message.
type olMessage struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

// olChatCompletionRequest defines the structure of the request body.
type olChatCompletionRequest struct {
	Model    string      `json:"model"`
	Messages []olMessage `json:"messages"`
	Stream   bool        `json:"stream"`
}

// olChatCompletionResponse defines the structure of one streamed chunk.
type olChatCompletionResponse struct {
	Message olMessage `json:"message"`
	Done    bool      `json:"done"`
}

// olsendRequestWithRetry sends the request and retries on HTTP 429.
func olsendRequestWithRetry(client *http.Client, requestBody []byte) (*http.Response, error) {
	for {
		req, err := http.NewRequest("POST", obaseURL+oendpoint, bytes.NewReader(requestBody))
		if err != nil {
			return nil, err
		}
		req.Header.Set("Content-Type", "application/json")

		resp, err := client.Do(req)
		if err != nil {
			return nil, err
		}
		if resp.StatusCode != http.StatusTooManyRequests {
			return resp, nil
		}

		// Retry-After is read as a number of seconds; wait 5 seconds by default.
		wait := 5 * time.Second
		if secs, err := strconv.Atoi(resp.Header.Get("Retry-After")); err == nil && secs >= 0 {
			wait = time.Duration(secs) * time.Second
		}
		resp.Body.Close()
		time.Sleep(wait)
	}
}

func main() {
	client := &http.Client{} // one HTTP client reused for every request

	// Conversation history, seeded with the system prompt.
	history := []olMessage{
		{Role: "system", Content: "You are a Tang dynasty poet who excels at imitating Li Bai's style."},
	}

	// Scanner for standard input.
	scanner := bufio.NewScanner(os.Stdin)

	for {
		fmt.Print("Enter your question (or type 'exit' to quit): ")
		if !scanner.Scan() {
			break // stdin was closed
		}
		userInput := strings.TrimSpace(scanner.Text())

		// Exit condition.
		if userInput == "exit" {
			fmt.Println("Thanks for using this program. Goodbye!")
			break
		}

		// Add the user input to the history.
		history = append(history, olMessage{Role: "user", Content: userInput})

		// The history already starts with the system prompt, so it is sent as is.
		requestBody := olChatCompletionRequest{
			Model:    omodelID,
			Messages: history,
			Stream:   true,
		}

		// Serialize the request body to JSON.
		requestBodyJSON, err := json.Marshal(requestBody)
		if err != nil {
			fmt.Println("Error marshalling request body:", err)
			continue
		}
		fmt.Println("request: " + string(requestBodyJSON)) // debug output

		// Send the request, retrying when needed.
		resp, err := olsendRequestWithRetry(client, requestBodyJSON)
		if err != nil {
			fmt.Println("Error sending request after retries:", err)
			continue
		}

		// Check the response status code.
		if resp.StatusCode != http.StatusOK {
			fmt.Printf("Received non-200 response status code: %d\n", resp.StatusCode)
			resp.Body.Close()
			continue
		}

		// The stream is a sequence of JSON objects. A json.Decoder reads them
		// one at a time, so a chunk split across network reads is still
		// parsed correctly (a fixed-size byte buffer would not guarantee that).
		var reply strings.Builder
		role := "assistant"
		decoder := json.NewDecoder(resp.Body)
		fmt.Print("AI reply: ")
		for {
			var chunk olChatCompletionResponse
			err := decoder.Decode(&chunk)
			if err == io.EOF {
				break
			}
			if err != nil {
				fmt.Println("\nError decoding streamed response:", err)
				break
			}
			fmt.Print(chunk.Message.Content)
			reply.WriteString(chunk.Message.Content)
			if chunk.Message.Role != "" {
				role = chunk.Message.Role
			}
			if chunk.Done {
				break
			}
		}
		resp.Body.Close()
		fmt.Println()

		// Add the assistant's full reply to the history.
		history = append(history, olMessage{Role: role, Content: reply.String()})
	}
}
```