1、全景图片的介绍

全景图通过广角的表现手段以及绘画、相片、视频、三维模型等形式,尽可能多表现出周围的环境。360全景,即通过对专业相机捕捉整个场景的图像信息或者使用建模软件渲染过后的图片,使用软件进行图片拼合,并用专门的播放器进行播放,即将平面照片或者计算机建模图片变为360 度全观,用于虚拟现实浏览,把二维的平面图模拟成真实的三维空间,呈现给观赏者。

2、如何实现

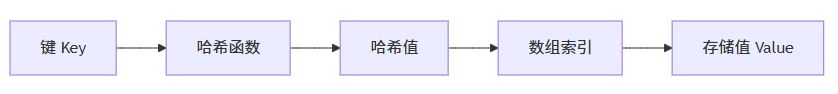

2.1、实现原理

2.2、实现代码

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

|

# -*- coding:utf-8 -*-u'''Created on 2019年6月14日@author: wuluo'''__author__ = 'wuluo'__version__ = '1.0.0'__company__ = u'重庆交大'__updated__ = '2019-06-14'import numpy as npimport cv2 as cvfrom PIL import Imagefrom matplotlib import pyplot as pltprint('cv version: ', cv.__version__)def pinjie(): top, bot, left, right = 100, 100, 0, 500 img1 = cv.imread('G:/2018and2019two/qianrushi/wuluo1.png') cv.imshow("img1", img1) img2 = cv.imread('G:/2018and2019two/qianrushi/wuluo2.png') cv.imshow("img2", img2) srcImg = cv.copyMakeBorder( img1, top, bot, left, right, cv.BORDER_CONSTANT, value=(0, 0, 0)) testImg = cv.copyMakeBorder( img2, top, bot, left, right, cv.BORDER_CONSTANT, value=(0, 0, 0)) img1gray = cv.cvtColor(srcImg, cv.COLOR_BGR2GRAY) img2gray = cv.cvtColor(testImg, cv.COLOR_BGR2GRAY) sift = cv.xfeatures2d_SIFT().create() # find the keypoints and descriptors with SIFT kp1, des1 = sift.detectAndCompute(img1gray, None) kp2, des2 = sift.detectAndCompute(img2gray, None) # FLANN parameters FLANN_INDEX_KDTREE = 1 index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5) search_params = dict(checks=50) flann = cv.FlannBasedMatcher(index_params, search_params) matches = flann.knnMatch(des1, des2, k=2) # Need to draw only good matches, so create a mask matchesMask = [[0, 0] for i in range(len(matches))] good = [] pts1 = [] pts2 = [] # ratio test as per Lowe's paper for i, (m, n) in enumerate(matches): if m.distance < 0.7 * n.distance: good.append(m) pts2.append(kp2[m.trainIdx].pt) pts1.append(kp1[m.queryIdx].pt) matchesMask[i] = [1, 0] draw_params = dict(matchColor=(0, 255, 0), singlePointColor=(255, 0, 0), matchesMask=matchesMask, flags=0) img3 = cv.drawMatchesKnn(img1gray, kp1, img2gray, kp2, matches, None, **draw_params) #plt.imshow(img3, ), plt.show() rows, cols = srcImg.shape[:2] MIN_MATCH_COUNT = 10 if len(good) > MIN_MATCH_COUNT: src_pts = np.float32( [kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2) dst_pts = np.float32( [kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2) M, mask = cv.findHomography(src_pts, dst_pts, cv.RANSAC, 5.0) warpImg = cv.warpPerspective(testImg, np.array( M), (testImg.shape[1], testImg.shape[0]), flags=cv.WARP_INVERSE_MAP) for col in range(0, cols): if srcImg[:, col].any() and warpImg[:, col].any(): left = col break for col in range(cols - 1, 0, -1): if srcImg[:, col].any() and warpImg[:, col].any(): right = col break res = np.zeros([rows, cols, 3], np.uint8) for row in range(0, rows): for col in range(0, cols): if not srcImg[row, col].any(): res[row, col] = warpImg[row, col] elif not warpImg[row, col].any(): res[row, col] = srcImg[row, col] else: srcImgLen = float(abs(col - left)) testImgLen = float(abs(col - right)) alpha = srcImgLen / (srcImgLen + testImgLen) res[row, col] = np.clip( srcImg[row, col] * (1 - alpha) + warpImg[row, col] * alpha, 0, 255) # opencv is bgr, matplotlib is rgb res = cv.cvtColor(res, cv.COLOR_BGR2RGB) # show the result plt.figure() plt.imshow(res) plt.show() else: print("Not enough matches are found - {}/{}".format(len(good), MIN_MATCH_COUNT)) matchesMask = Noneif __name__ == "__main__": pinjie() |

3、运行效果

原始的两张图:

效果图:

原始图,水杯没有处理好,导致此处效果不好。

原文链接:https://blog.csdn.net/qq_43433255/article/details/92211849

相关文章