postgresql使用dblink跨库增删改查

一、使用步骤

1、创建dblink扩展,连接与被连接的两个数据库都要执行下面sql

|

1

|

create extension if not exists dblink;

|

2、跨库查询或增删改

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

#查询

SELECT

*

FROM

dblink ( 'host=localhost port=5432 dbname=piedssdb_sort user=postgres password=qQq314159@26', 'select user_id,account from piedss_biz.sys_user' ) AS T ( ID TEXT, NAME TEXT);

#新增

SELECT dblink_exec ( 'host=localhost port=5432 dbname=piedssdb_sort user=postgres password=qQq314159@26', 'INSERT INTO piedss_biz.sys_user(user_id,account,password,sex,super_admin_flag,status_flag,del_flag,create_user,real_name) VALUES(''1588006895019589631'',''ericfrq'',''1qazWSX'',''F'',''Y'',''1'',''N'',''dms_datahub'',''管理员'') ' );

#将库A的数据查询出来后直接插入存库B

INSERT INTO dms_usercenter_userinfo ( ID, true_name, username, PASSWORD, phone ) SELECT

*

FROM

dblink ( 'host=localhost port=5432 dbname=piedssdb_sort user=postgres password=qQq314159@26', 'SELECT sys_user.user_id,sys_user.real_name,sys_user.account,sys_user.password,sys_user.tel FROM piedss_biz.sys_user' ) AS T ( ID TEXT, true_name TEXT, username TEXT, PASSWORD TEXT, phone TEXT );

#修改

SELECT dblink_exec ( 'host=localhost port=5432 dbname=piedssdb_sort user=postgres password=qQq314159@26', 'UPDATE piedss_biz.sys_user SET account=''ericfrq'',password=''1qazWSX'',sex=''F'',super_admin_flag=''Y'',status_flag=''1'',del_flag=''N'',create_user=''dms_datahub'',real_name=''管理员''WHERE user_id=''158800689501958963111''' );

#删除

SELECT dblink_exec ( 'host=localhost port=5432 dbname=piedssdb_sort user=postgres password=qQq314159@26', 'DELETE FROM piedss_biz.sys_user WHERE user_id=''4028db8283d486350183d533f7570000'' AND create_user=''dms_datahub''' );

|

3、如果不想每一次都写完整的dblink连接信息,可以先起别名

|

1

2

3

4

5

6

|

#起别名

select dblink_connect('bieming', 'host=localhost port=5432 dbname=piedssdb_sort user=postgres password=qQq314159@26');

#进行操作

SELECT dblink_exec ( 'bieming', 'INSERT INTO piedss_biz.sys_user(user_id,account,password,sex,super_admin_flag,status_flag,del_flag,create_user,real_name) VALUES(''1588006895019589631'',''ericfrq'',''1qazWSX'',''F'',''Y'',''1'',''N'',''dms_datahub'',''管理员'') ' );

#关闭连接

SELECT dblink_disconnect('bieming');

|

4、补充:mybatis直接执行上面的sql写法

参考下面补充介绍:pgsql个人笔记,mybatis+postgresql写原生sql,不用xml

补充:pgsql个人笔记

一、mybatis+pgsql的xml

下面统计的sql中用到的聚合函数具体解析说明: 第一部分

- array_to_string( ARRAY_AGG ( stp.source_server ), ',' ): 将stp的source_server的数据转化为数组,再以逗号分隔拼接起来转成字符串。

- array_to_string( ARRAY_AGG ( stp.target_server ), ',' )将stp的target_server的数据转化为数组,再将数组转换为字符串,用“,”分隔。(有点类似于Mysql的group_concat()函数)

- concat_ws ( ',', 'a', 'b' ) :将ab以逗号连接。在下面的案例中:concat_ws ( ',', array_to_string( ARRAY_AGG ( stp.source_server ), ',' ), array_to_string( ARRAY_AGG ( stp.target_server ), ',' ) )是将第一步的两个结果,合并成一个字符串

- regexp_split_to_table((a,b),',' ) :将a,b以逗号分隔开并将a、b分别作为表查询的结果。在下面的案例中,regexp_split_to_table( ( concat_ws ( ',', array_to_string( ARRAY_AGG ( stp.source_server ), ',' ), array_to_string( ARRAY_AGG ( stp.target_server ), ',' ) ) ), ',' ) 将第二步的结果,以逗号“,”分隔,并把每一项作为结果

- DISTINCT将第三步的结果去重

- COUNT ( * )统计第四步去重后的数量

第二部分

- SUM ( stad.data_volume ),计算data_volume的和

- ROUND( '100' :: NUMERIC / 10, 3 )将100除以10后保留小数点后三位。其中:: NUMERIC将字符串’100’转为数字(numeric类型最多能存储有1000个数字位的数字并且能进行准确的数值计算。它主要用于需要准确地表示数字的场合,如货币金额。不过,对numeric 类型进行算术运算比整数类型和浮点类型要慢很多。)。案例中ROUND( SUM ( stad.data_volume ) :: NUMERIC / ( 1024 * 1024 * 1024 ), 3 )将第一步的结果转为字符串并除以1024的三次方(将字节B转–>kb–>mb–>GB)

- CAST(oti.institution_id AS VARCHAR) 将int型institution_id转为varchar型

- 将时间字段格式化为指定格式to_char(create_time,'yyyy-mm-dd')

mybatis+postgresql写原生sql,不用xml

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

@Select({"${sqlStr}"})

@Results({

@Result(column = "gid", property = "gid", jdbcType = JdbcType.INTEGER, id = true),

@Result(column = "name", property = "name", jdbcType = JdbcType.VARCHAR),

@Result(column = "geom", property = "geom", jdbcType = JdbcType.VARCHAR),

@Result(column = "code", property = "code", jdbcType = JdbcType.VARCHAR)

})

List<ModelPolygon> exeNativeSql(@Param("sqlStr") String sqlStr);

@Select({"${sqlStr}"})

List<ModelPolygon> exeNativeSql(@Param("sqlStr") String sqlStr);

//"select gid as gid,name as name,ST_AsGeoJson(geom) as geom,code as code from wl_model_polygon"

|

整个dao层的写法:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

|

package com.xxx.mapper;

import com.alibaba.fastjson.JSONObject;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;

import com.xxx.entity.UserInfo;

import org.apache.ibatis.annotations.*;

import org.apache.ibatis.type.JdbcType;

import java.util.List;

@Mapper

public interface UserInfoMapper extends BaseMapper<UserInfo> {

@Select({"${sqlStr}"})

@Results({

@Result(column = "id", property = "id", jdbcType = JdbcType.VARCHAR, id = true),

@Result(column = "username", property = "username", jdbcType = JdbcType.VARCHAR),

@Result(column = "email", property = "email", jdbcType = JdbcType.VARCHAR),

@Result(column = "phone", property = "phone", jdbcType = JdbcType.VARCHAR),

@Result(column = "password", property = "password", jdbcType = JdbcType.VARCHAR),

@Result(column = "true_name", property = "trueName", jdbcType = JdbcType.VARCHAR),

@Result(column = "usetime", property = "usetime", jdbcType = JdbcType.VARCHAR)

})

List<UserInfo> exeNativeQuerySql(@Param("sqlStr") String sqlStr);

@Select({"${sqlStr}"})

List<JSONObject> exeNativeExecSql(@Param("sqlStr") String sqlStr);

}

|

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

|

<!--数据量统计 -->

<select id="getDataByParams" resultType="com.htht.datatrans.app.vo.CountProtocolVO">

SELECT

*

FROM

(

SELECT COUNT

( * ) AS useNode

FROM

(

SELECT DISTINCT

regexp_split_to_table(

(

concat_ws ( ',', array_to_string( ARRAY_AGG ( stp.source_server ), ',' ), array_to_string( ARRAY_AGG ( stp.target_server ), ',' ) )

),

','

)

FROM

sync_t_protocol AS stp

WHERE

stp.deleted = 0

AND stp.protocol_type = 'data communication'

) res

) node1,

(

SELECT COUNT

( * ) AS runningNode

FROM

(

SELECT DISTINCT

regexp_split_to_table(

(

concat_ws ( ',', array_to_string( ARRAY_AGG ( stp.source_server ), ',' ), array_to_string( ARRAY_AGG ( stp.target_server ), ',' ) )

),

','

)

FROM

sync_t_protocol AS stp

WHERE

stp.deleted = 0

AND stp.protocol_type = 'data communication'

AND stp.run_state = 'running'

) res

) node2,

( SELECT COUNT ( * ) AS protocolTotal FROM sync_t_protocol AS stp WHERE stp.deleted = 0 AND stp.protocol_type = 'data communication' ) protocol1,

(

SELECT COUNT

( * ) AS runningProtocol

FROM

sync_t_protocol AS stp

WHERE

stp.deleted = 0

AND stp.protocol_type = 'data communication'

AND stp.run_state = 'running'

) protocol2,

(

SELECT COUNT

( * ) AS exceptionalProtocol

FROM

sync_t_protocol AS stp

WHERE

stp.deleted = 0

AND stp.protocol_type = 'data communication'

AND stp.run_state = 'exception'

) protocol3,

(

SELECT

ROUND( SUM ( stad.data_volume ) :: NUMERIC / ( 1024 * 1024 * 1024 ), 3 ) AS runningData

FROM

sync_t_action_detail AS stad

INNER JOIN sync_t_protocol AS stp ON stad.protocol_id = stp.protocol_id

AND stp.deleted = 0

AND protocol_type = 'data communication'

WHERE

stad.execute_state = ANY ( STRING_TO_ARRAY( 'running', ',' ) )

) data1,

(

SELECT

ROUND( SUM ( stad.data_volume ) :: NUMERIC / ( 1024 * 1024 * 1024 ), 3 ) AS historyData

FROM

sync_t_action_detail AS stad

INNER JOIN sync_t_protocol AS stp ON stad.protocol_id = stp.protocol_id

AND stp.deleted = 0

AND protocol_type = 'data communication'

WHERE

stad.execute_state = ANY ( STRING_TO_ARRAY( 'succeed,failed', ',' ) )

) data2

</select>

<select

id="getPagesByParams"

resultType="com.htht.datatrans.app.vo.CloudVO">

select * from ops_t_cloud where delete=0

<if test="cloudProvider != null and cloudProvider != ''">

and cloud_provider like '%'||#{cloudProvider,jdbcType=VARCHAR}||'%'

</if>

order by cloud_id

</select>

<select

id="getByCloudCodes"

resultType="com.htht.datatrans.app.entity.Cloud">

select * from ops_t_cloud where delete=0

<if test="cloudCodes != null and cloudCodes != ''">

and cloud_code = ANY(STRING_TO_ARRAY(#{cloudCodes,jdbcType=VARCHAR}, ','))

</if>

order by cloud_id

</select>

<select

id="getPagesByParams"

resultType="org.springblade.modules.datatrans.vo.ServerPageVO">

select ots.*,otc.cloud_name as cloudName,otc.domain_name as domainName

from ops_t_server ots

inner join ops_t_cloud otc on ots.cloud_id = otc.cloud_id

<if test="institutionId != null and institutionId != ''">

inner join ops_t_institution oti ON CAST(oti.institution_id AS VARCHAR) = ots.institution_id

</if>

where ots.deleted=0

<if test="cloudProvider != null and cloudProvider != ''">

and otc.cloud_provider like concat(concat('%',#{cloudProvider,jdbcType=VARCHAR}),'%')

</if>

order by ots.server_id

</select>

|

二、字符串替换

将address字段里的 “区” 替换为 “呕” 显示,如下

|

1

2

|

select *,replace(address,'区','呕') AS rep

from test_tb

|

将name字段里的 “我” 替换为 “你” 保存,如下

|

1

2

|

UPDATE blade_visual

SET "name" = ( REPLACE ( NAME, '你', '你们三' ) )

|

三、postgre做空间数据分析

比如面相交

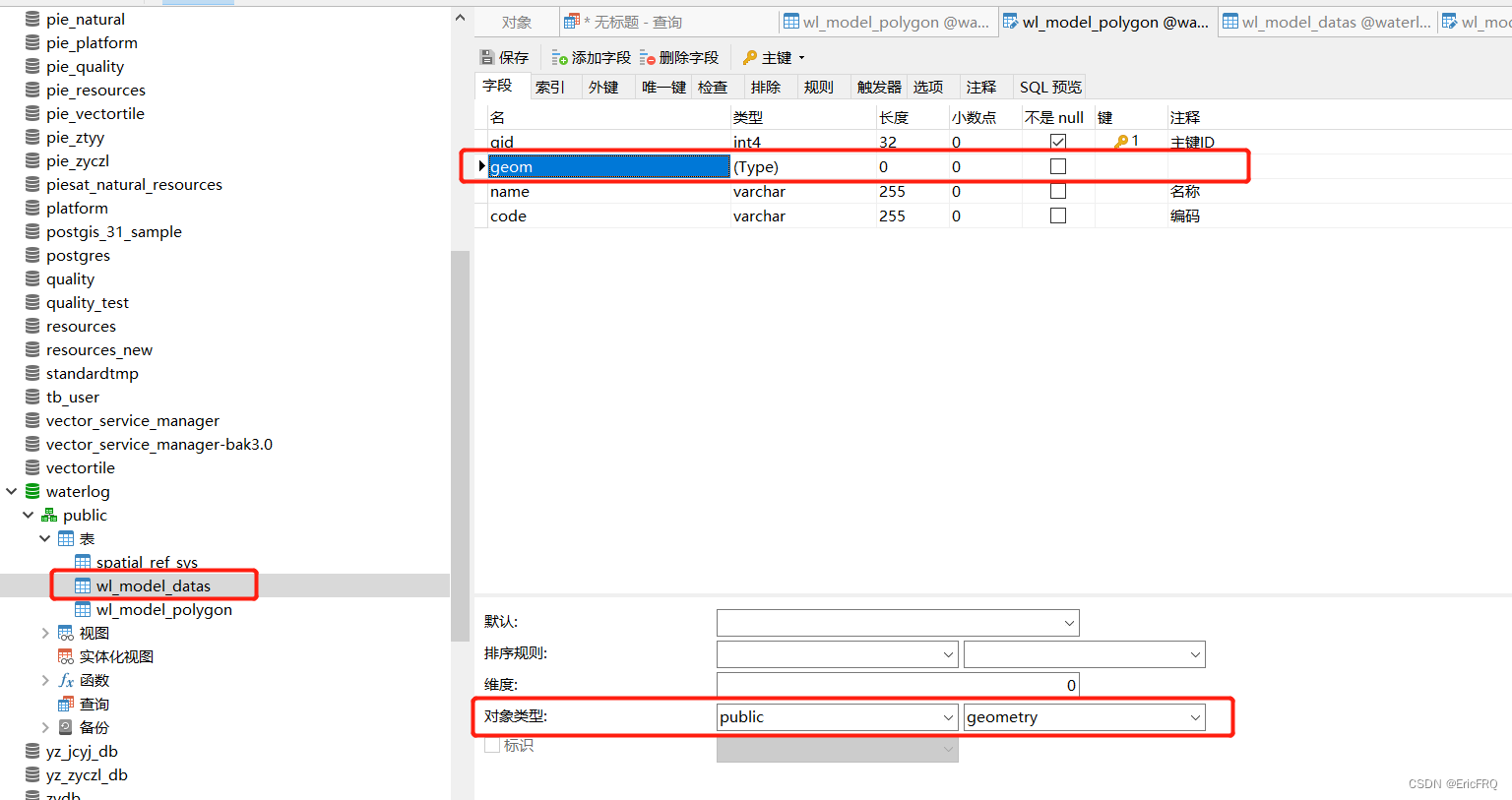

1、使用步骤 新建空间索引create extension postgis;创建geometry类型字段

3.插入geometry数据

|

1

2

3

4

|

insert into wl_model_polygon(geom,name,code) values ('SRID=4326;POLYGON ((116.2078857421875 39.928694653732364, 116.20925903320312 39.91078961774283, 116.20651245117188 39.89393354266699, 116.23397827148436 39.86547951378614, 116.24496459960938 39.82752244475985, 116.29852294921876 39.78954439311165, 116.3397216796875 39.78532331459258, 116.3836669921875 39.78848914776114, 116.41799926757811 39.79904087286648, 116.444091796875 39.80748108746673, 116.45919799804688 39.818029898770206, 116.48117065429686 39.83490462943255, 116.50314331054688 39.86231722624386, 116.50588989257812 39.88023492849342, 116.5045166015625 39.90973623453719, 116.4935302734375 39.925535281697286, 116.5045166015625 39.94975340768179, 116.47979736328125 39.98132938627215, 116.47567749023438 39.99395569397331, 116.45507812500001 40.000267972646796, 116.43859863281249 40.000267972646796, 116.4166259765625 39.998163944585805, 116.36581420898438 40.00868343656941, 116.35208129882812 40.00447583427404, 116.30264282226562 40.01078714046552, 116.27792358398436 39.999215966720165, 116.24771118164061 39.99500778093748, 116.23260498046874 39.990799335838034, 116.21200561523438 39.95606977009003, 116.2078857421875 39.928694653732364))

','产流区单元','1');

insert into wl_model_polygon(geom,name) values ('SRID=4326;POLYGON ((118.76382985390228 30.94145000894207, 118.76367454479498 30.941584547525736, 118.76350796485406 30.941783659824637, 118.76339844820404 30.941924731032316, 118.76330916107543 30.942036894992782, 118.76327040751187 30.94208876002824, 118.76320401397413 30.942103072784164, 118.76311833308432 30.942151844969032, 118.76297412628924 30.94233241273298, 118.76284033474406 30.942507490217793, 118.76274061465483 30.942508998759877, 118.76272709824036 30.942414705157432, 118.76260312963427 30.941400575247428, 118.76246246134042 30.940958834692708, 118.76241983918237 30.940824987759868, 118.76235477020532 30.94068130925791, 118.76232222882629 30.940647540114867, 118.76293788696353 30.940087796711964, 118.76307156743417 30.939971500356137, 118.76327063857775 30.93979831612114, 118.7635558539929 30.939541452438277, 118.7637265129556 30.93939848398361, 118.76377770256443 30.939355600092142, 118.76441910672565 30.9388159785355, 118.76463064154075 30.938667159236218, 118.76495341070222 30.938493604345012, 118.76523672506141 30.938409477348614, 118.7654197381786 30.9383707434975, 118.76582985307277 30.938323591604444, 118.76622053407164 30.9382963001612, 118.76643330279228 30.938318107809664, 118.7664801815057 30.938337017341382, 118.76652477352764 30.938350675989682, 118.7666582796586 30.938456597505137, 118.76673673369658 30.938603248874927, 118.76677236100761 30.938782266531803, 118.76684549711081 30.939149764149192, 118.76701632885761 30.93988929949859, 118.7670376347395 30.939981532336844, 118.7664187768753 30.94010020307178, 118.76614981686157 30.940150404326346, 118.7658940991671 30.940243370814187, 118.76569247579346 30.940342755588517, 118.76556089310861 30.940412552128976, 118.76552036966268 30.940466789099446, 118.76550573912039 30.940574355758315, 118.76551217968313 30.941150469586262, 118.76551098575817 30.941290908017095, 118.76550989936004 30.941418699044846, 118.76542260756776 30.94141695016964, 118.76499121731501 30.941408306476433, 118.76391937007008 30.94138581330907, 118.76382985390228 30.94145000894207))','产流区单元');

|

4.pg库清空数据和主键自增

|

1

2

3

|

TRUNCATE TABLE wl_model_polygon;

TRUNCATE wl_model_polygon RESTART IDENTITY;

|

5.相交分析sql

|

1

2

3

4

|

select gid,name,ST_AsGeoJson(geom) as geom from wl_model_polygon t where ST_Intersects(t.geom,ST_GeomFromGeoJSON('{"type":"Polygon","coordinates":[[[118.78355107920095,30.938155072659868],[118.78134774048146,30.939763084116294],[118.7812957819458,30.93972376187253],[118.78286595934765,30.93838280705404],[118.7833158576293,30.93793078253492],[118.78355013577584,30.938153972966006],[118.78355107920095,30.938155072659868]]]}

'))

select gid,name,ST_AsGeoJson(geom) as geom from wl_model_polygon t where ST_Intersects(t.geom,ST_GeomFromGeoJSON('{"type":"Polygon","coordinates":[[[118.78355107920095,30.938155072659868],[118.78134774048146,30.939763084116294],[118.7812957819458,30.93972376187253],[118.78286595934765,30.93838280705404],[118.7833158576293,30.93793078253492],[118.78355013577584,30.938153972966006],[118.78355107920095,30.938155072659868]]]}'))

|

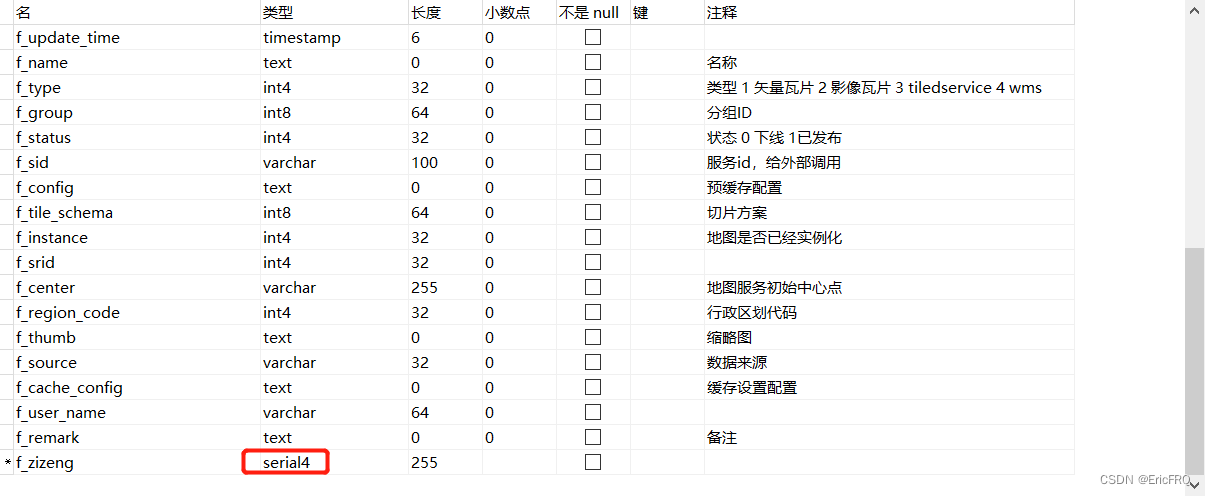

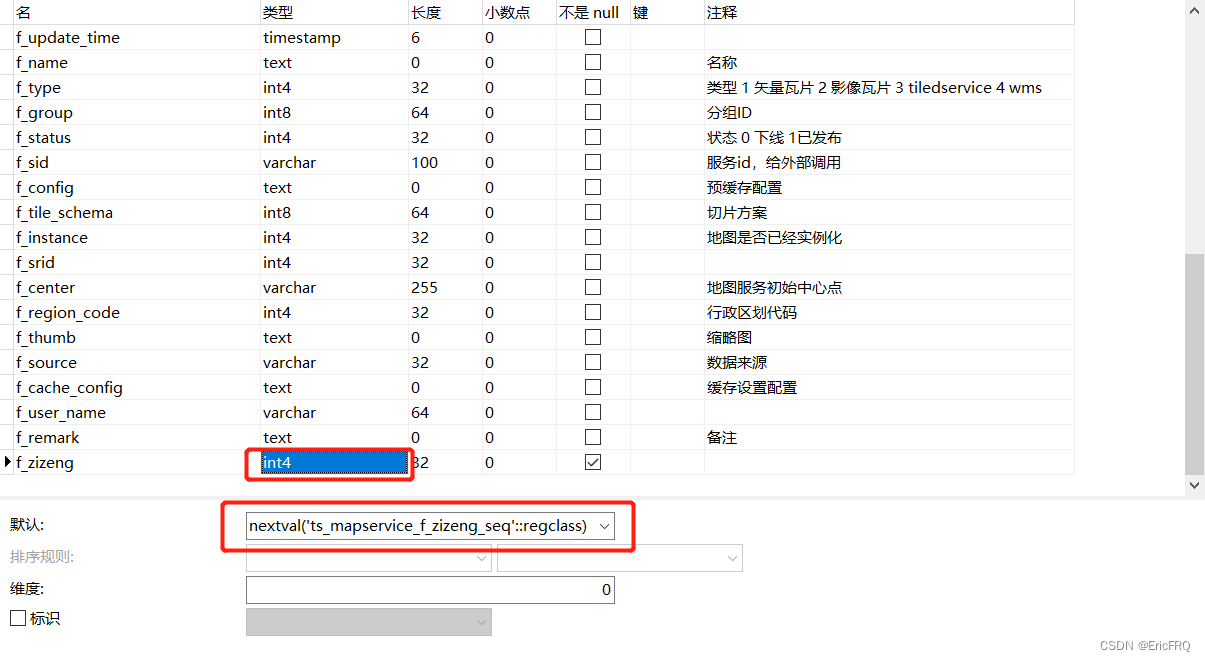

四、自增序列

1、navicat创建自增字段

设置为serial4类型

保存后自动加序列

2、重置自增序列号为指定数值

第一步:select pg_get_serial_sequence('ts_mapservice', 'f_remark');查看序列为public.ts_mapservice_f_remark_seq

第二步:更新序列值ALTER SEQUENCE public.ts_mapservice_f_remark_seq RESTART WITH 8;

或者直接初始化自增数值:TRUNCATE TABLE wl_model_polygon; TRUNCATE wl_model_polygon RESTART IDENTITY;

|